Practical Guide: How to Build a Web Scraper with Laravel Actions

In this walkthrough, we’ll build a simple web scraper from scratch.

It is possible to scrape and parse almost any kind of data that is available online. This example extracts data from JSON, which is probably the simplest format to work with. Packages exist that help parse other formats such as HTML, XML, PDF, CSV, and Excel, and even images with the help of AI.

In The Netherlands, there is a website (https://www.verlorenofgevonden.nl) that tracks lost and impounded bicycles. With this project, we will fetch the relevant data found on this site to do some analytics on the raw data.

Getting started

If you are familiar with this part, you can skip it. We’ll just follow the official documentation to create a Laravel project with Docker. I’m doing this on macOS, so please refer to the documentation if you are on Linux or Windows, as your instructions might be different.

Create the project

Navigate to your project directory and create a new project. It might take a while as it will pull and build the containers locally.

curl -s "https://laravel.build/laravel-scraper" | bash

Start the environment

Navigate to the new directory and start the environment. It is also advised to create a shell alias for sail; see the docs.

cd laravel-scraper && sail up -d --build

# If you get a response like "command not found", run
# ./vendor/bin/sail up -d --build instead, or create the alias
# described in the docs.

Once the application’s Docker containers have been started, you can access the application in your web browser at http://localhost. You should see the default landing page.

Dependencies

We’ll use a few basic dependencies to help us get started.

Laravel Actions

For this project, I decided to experiment with Laravel Actions. It is not required but seems like the right tool for the job.

sail composer require lorisleiva/laravel-actions

Guzzle HTTP client

Laravel provides an expressive, minimal API around the Guzzle HTTP client, allowing you to quickly make outgoing HTTP requests to communicate with other web applications.

sail composer require guzzlehttp/guzzle

Laravel Pint (optional)

Laravel Pint is an opinionated PHP code-style fixer for minimalists. Pint is built on top of PHP-CS-Fixer and makes it simple to ensure that your code style stays clean and consistent.

sail composer require laravel/pint --dev

The core logic

Fetching data from a website is quite simple. You can follow some basic steps to apply the approach to your use case.

- Browse the site manually.

- Inspect the network log to identify how the website fetches the data. Ideally, you will find some API requests to target directly.

- Replicate the requests manually with a tool like Postman to figure out the flow, parameters, and shape of the data.
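Once you know which request the site makes, it helps to rebuild the URL yourself before writing any scraper code. The snippet below is a minimal sketch in plain PHP that assembles the same query string the scraper later in this guide sends. `buildSearchUrl()` is a hypothetical helper name, and the `timestamp` parameter is left out so the output stays stable.

```php
<?php

declare(strict_types=1);

/**
 * Build the search URL for one page of results.
 * The parameter names mirror the request used later in this guide;
 * buildSearchUrl() itself is a hypothetical helper for illustration.
 */
function buildSearchUrl(DateTimeInterface $dateFrom, DateTimeInterface $dateTo, int $from = 0): string
{
    $query = http_build_query([
        'site' => 'nl',
        'q' => 'fietsendepot',
        'date_from' => $dateFrom->format('d-m-Y'),
        'date_to' => $dateTo->format('d-m-Y'),
        'from' => $from, // offset of the first result on this page
    ]);

    return 'https://verlorenofgevonden.nl/scripts/ez.php?' . $query;
}

$url = buildSearchUrl(new DateTimeImmutable('2022-09-01'), new DateTimeImmutable('2022-10-01'));

echo $url, PHP_EOL;
```

You can paste the printed URL into Postman or your browser to inspect the shape of the JSON before mapping it to a model.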

The schema

For the sake of this guide, we’ll keep the schema simple by just creating a single Bicycle model for the data. Generate the model and migration in your terminal with the following command.

sail artisan make:model Bicycle --migration

File: app/Models/Bicycle.php

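<?php

namespace App\Models;

use Illuminate\Database\Eloquent\Model;

class Bicycle extends Model
{
    // Attributes that may be mass-assigned.
    protected $fillable = [
        'object_number',
        'type',
        'sub_type',
        'brand',
        'color',
        'description',
        'city',
        'storage_location',
        'registered_at',
    ];

    // Cast the registration date to a date-time instance.
    protected $casts = [
        'registered_at' => 'datetime',
    ];
}

File: database/migrations/create_bicycles_table.php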

<?php

use Illuminate\Database\Migrations\Migration;
use Illuminate\Database\Schema\Blueprint;
use Illuminate\Support\Facades\Schema;

return new class extends Migration
{
    public function up(): void
    {
        Schema::create('bicycles', function (Blueprint $table) {
            $table->id();
            // The unique object number is the key used for upserts.
            $table->string('object_number', 32)->unique();
            $table->string('type', 32);
            $table->string('sub_type', 32);
            $table->string('brand', 32);
            $table->string('color', 32);
            $table->text('description');
            $table->string('city', 32);
            $table->string('storage_location', 64);
            $table->dateTime('registered_at');
            $table->timestamps();
        });
    }

    public function down(): void
    {
        Schema::dropIfExists('bicycles');
    }
};

Run the migration

sail artisan migrate

The scraper

We’ll create a single class with Laravel Actions to keep the required logic contained. The sample command will focus on fetching the most basic data. You can always extend this functionality and go as deep as you need to.

For example, you could extract the unique “frame number” from the description to check how many times a single bicycle has been impounded.
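As a rough sketch of that idea, the snippet below pulls a frame number out of a free-text description with a regular expression and counts how often each one appears. The "Framenummer:" label, the sample descriptions, and the `extractFrameNumber()` helper are all assumptions for illustration; check a few real descriptions first and adjust the pattern to whatever they actually look like.

```php
<?php

declare(strict_types=1);

/**
 * Pull a frame number out of a free-text description.
 * ASSUMPTION: the description contains a label such as "Framenummer: WBK1234567".
 * Real descriptions may use a different label or layout.
 */
function extractFrameNumber(string $description): ?string
{
    // Require at least one digit so words like "onbekend" are not mistaken for a number.
    $pattern = '/framenummer\s*:?\s*((?=[A-Z0-9-]*\d)[A-Z0-9-]{4,})/i';

    if (preg_match($pattern, $description, $matches) === 1) {
        return strtoupper($matches[1]);
    }

    return null;
}

// Made-up descriptions, purely to exercise the helper.
$descriptions = [
    'Zwarte damesfiets. Framenummer: wbk1234567',
    'Blauwe herenfiets, framenummer WBK1234567',
    'Rode kinderfiets, framenummer onbekend',
];

// Count how many times each frame number shows up.
$frameNumbers = array_filter(array_map('extractFrameNumber', $descriptions));
$timesImpounded = array_count_values($frameNumbers);

print_r($timesImpounded);
```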

File: app/Actions/Scrapers/FetchBicycles.php

<?php

namespace App\Actions\Scrapers;

use App\Models\Bicycle;
use Illuminate\Console\Command;
use Illuminate\Support\Carbon;
use Illuminate\Support\Facades\Http;
use Lorisleiva\Actions\Concerns\AsAction;

class FetchBicycles
{
    use AsAction;

    public string $commandSignature = 'scraper:fetch-bicycles';

    public string $commandDescription = 'Fetch impounded bicycles from www.verlorenofgevonden.nl';

    public function asCommand(Command $command): void
    {
        $this->handle($command);
    }

    public function handle(Command $command): void
    {
        // Fetch everything registered during the past month.
        $this->fetch($command, now()->subMonth(), now());
    }

    private function fetch(
        Command $command,
        Carbon $dateFrom,
        Carbon $dateTo,
        int $from = 0
    ): void {
        // Request one page of results, starting at offset $from.
        $response = Http::acceptJson()->get('https://verlorenofgevonden.nl/scripts/ez.php', [
            'site' => 'nl',
            'q' => 'fietsendepot',
            'date_from' => $dateFrom->format('d-m-Y'),
            'date_to' => $dateTo->format('d-m-Y'),
            'timestamp' => now()->timestamp,
            'from' => $from,
        ]);

        $hits = collect($response->json('hits.hits'));

        // An empty page means there is nothing left to fetch.
        if ($hits->isEmpty()) {
            $command->info('Done processing');

            return;
        }

        // Map each hit to a row matching the bicycles table.
        $upserts = collect();

        foreach ($hits as $hit) {
            $registeredAt = Carbon::parse(data_get($hit, '_source.RegistrationDate'));

            $upserts->push([
                'object_number' => data_get($hit, '_source.ObjectNumber'),
                'type' => data_get($hit, '_source.Category'),
                'sub_type' => data_get($hit, '_source.SubCategory'),
                'brand' => data_get($hit, '_source.Brand'),
                'color' => data_get($hit, '_source.Color'),
                'description' => data_get($hit, '_source.Description'),
                'city' => data_get($hit, '_source.City'),
                'storage_location' => data_get($hit, '_source.StorageLocation.Name'),
                'registered_at' => $registeredAt,
            ]);
        }

        // Insert new rows and update existing ones, matched on object_number.
        Bicycle::upsert($upserts->toArray(), ['object_number'], [
            'type',
            'sub_type',
            'brand',
            'color',
            'description',
            'city',
            'storage_location',
            'registered_at',
        ]);

        $total = $from + $upserts->count();

        $command->info(sprintf('Processed %d results', $total));

        // Fetch the next page, using the running total as the new offset.
        $this->fetch($command, $dateFrom, $dateTo, $total);
    }
}

File: app/Console/Kernel.php

class Kernel extends ConsoleKernel

{

protected $commands = [

FetchBicycles::class,

];

// ...

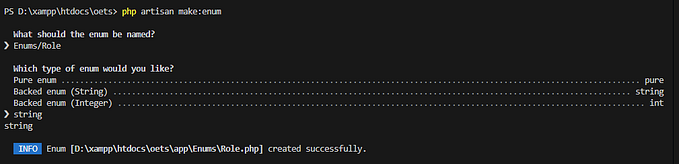

}Run the command

sail artisan scraper:fetch-bicycles
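The command pages through the results by calling itself with a growing `from` offset until an empty page comes back. The same offset-based paging can also be written as a plain loop, which avoids deep recursion when there are many pages. The sketch below shows only the paging logic: `processAllPages()` and the `$fetchPage` callback are hypothetical names, and a fake in-memory data source stands in for the real HTTP call.

```php
<?php

declare(strict_types=1);

/**
 * Page through results using an offset until an empty page comes back.
 *
 * @param callable(int): array $fetchPage hypothetical callback: takes an offset, returns that page's hits
 * @return int total number of hits processed
 */
function processAllPages(callable $fetchPage): int
{
    $from = 0;

    while (true) {
        $hits = $fetchPage($from);

        // An empty page means we have reached the end.
        if ($hits === []) {
            return $from;
        }

        // ... map and upsert $hits here ...

        $from += count($hits);
    }
}

// Stand-in data source: 25 fake items served 10 at a time.
$items = range(1, 25);
$fetchPage = fn (int $from): array => array_slice($items, $from, 10);

$processed = processAllPages($fetchPage);

echo $processed, PHP_EOL; // prints 25
```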

Deeper analysis of historical data

After my bicycle was impounded for the second time in a few weeks, I was inspired to check the stats by scraping data from the site. To be fair, I parked my bicycle illegally on both occasions.

The findings were quite interesting and I generated the following charts from the scraped data. Note that these graphs were generated on 2022-10-01.
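Most of these charts boil down to counting rows per value of some column. As a minimal sketch, assuming the scraped rows have been loaded into an array, a hypothetical `countBy()` helper could look like the following. The sample rows are placeholders, not real results; in the database the same idea is a GROUP BY on the bicycles table.

```php
<?php

declare(strict_types=1);

/**
 * Count rows per distinct value of a column, most frequent first.
 * countBy() is a hypothetical helper for illustration.
 *
 * @param array<int, array<string, mixed>> $rows
 * @return array<string, int>
 */
function countBy(array $rows, string $column): array
{
    $counts = array_count_values(array_column($rows, $column));

    // Sort by count, highest first, keeping the column values as keys.
    arsort($counts);

    return $counts;
}

// Placeholder rows, not real scraped data.
$rows = [
    ['city' => 'Amsterdam', 'color' => 'Zwart'],
    ['city' => 'Utrecht', 'color' => 'Blauw'],
    ['city' => 'Amsterdam', 'color' => 'Rood'],
];

$perCity = countBy($rows, 'city');

print_r($perCity);
```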

Idea: Bicycle auction app

I believe there is a gap in the market in The Netherlands with impounded bicycles. The website helps the community to find their lost or impounded bicycles and allows you to have them delivered, for a fee that covers the penalty and delivery.

The bicycle depots in The Netherlands are filling up with thousands of bicycles. Many people never claim their impounded bicycles, causing a constant build-up of unwanted and unclaimed bikes.

Someone should create a simple application to put these abandoned bicycles up for auction. Fetching the data is quite simple, as this guide shows. To close the loop, allow the owners to set a minimum bid and/or a deadline. The owner could then send the keys through the post to the buyer upon a successful bid, and the standard delivery process can be used to deliver the bicycle to the new owner.